Part of the reason I think this article deserves special attention is that the shortcomings of statistical databases in India are rarely reported, yet the numbers from these databases are frequently used in newspaper and other media reports to buttress important policy arguments. In my work on the Supreme Court I found numerous such instances of miscounting and miscategorization. A journal article I wrote on the workload of the Indian Supreme Court had to devote several pages to the limitations of the data the Court collects. For example, thousands of Supreme Court admission matters are effectively counted twice – once as a defective unregistered matter and a second time as a cured registered matter. In the Supreme Court’s Annual Report, though, they are all just lumped together under the category of admission matters. It’s only if you dig deeper and are lucky enough to see the monthly statements on which the annual report is based that you discover this peculiar accounting method. The public, however – as in the case of the NCRB data – would have no idea how to correctly interpret the published data if they just read what the government releases.

Or take another example. The Supreme Court releases a publication called Court News, which is supposed to give the public (and the government) the definitive accounting of the current status of the Indian judicial system. Every quarter it releases data on the institution, disposal, and backlog of cases in the Supreme Court, High Courts, and Subordinate and District courts. You will see these numbers (particularly the backlog numbers) quoted widely in the media. But what are they counting, and what are they leaving out? You might think they must be counting everything that’s a matter somewhere in the judicial system. Well, yes and no. The issue arises with tribunals. Thousands and thousands of cases go to all sorts of tribunals – tax, service, environment, etc. The trouble is that some of these matters are counted in the subordinate court numbers and others are not. Basically, if a tribunal’s administrative chain of command runs to the High Court, then it reports its numbers and they are eventually tallied in Court News under subordinate courts. If a tribunal doesn’t report (administratively) to the High Courts, then its numbers are not counted anywhere in Court News. As a result, we don’t really know how many cases are pending in the Indian judicial system, because a whole bunch of matters are missing from these larger tallies in Court News and no one has gotten around to collecting them independently. But again, you wouldn’t realize this from reading Court News.

An even greater problem with the Court News data is that we don’t know how many of these matters in the judicial system are even contested. In 1925 the British released the Rankin Committee report on the status of the judiciary in India. It found, for example, that only 10% of cases in the Bengal courts were contested. In the almost 90 years since, as far as I know, there has been no publicly available report on how many cases are contested or uncontested in India. It is unlikely that uncontested cases account for 90% of the caseload today, as they did in 1925, but uncontested matters probably do account for a very large number of cases – think about all the uncontested traffic tickets or other minor cases that go through the lower courts with no challenge. The point is that one would need to know the number of contested vs. uncontested matters to have a general sense of what the workload of the courts is today. Even better would be to know when cases are being filed and how long it takes them to be resolved. Either way, this information isn’t available publicly.

Now I don’t think that the NCRB or the Indian Supreme Court release data that is easy to misinterpret because they are being malicious or trying to fool the public. I think instead it’s because those in government who collect data do so in a rote way. They are told to tally cases and pass the tallies on to their superiors. Their superiors are often collecting data in whatever way was in place before they got to their position, and they don’t have the time or inclination to change the data collection systems. Over the years I have met several people within the system who have recognized these types of problems and are trying to make the necessary changes, but they are fighting institutional momentum and there are few rewards for them even if they do succeed.

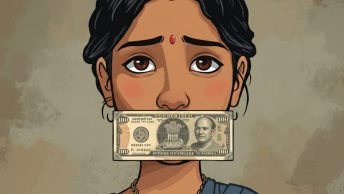

Given the current and highly visible challenge India is facing with sexual violence, it makes news when a good reporter uncovers that the NCRB rape numbers are off because of an accounting issue, but the large majority of instances of fuzzy data released by the government won’t grab widespread attention (I am under no illusion that the Indian media will suddenly find it a worthy story to report that the Indian Supreme Court has been sloppy in how it publishes data on admission matters). Yet informed public debate requires data that is both reliable and understandable. As Vrinda Grover suggests in the Hindu article, what is needed is greater transparency and access to the government databases on which publicly released data is based. India has a growing number of scholars who have the skills and inclination to sort through raw government data to see where errors or misinterpretations might be creeping into the publicly released results. It’s time that such communities of scholars – and the public more broadly – are tapped for this task. It will go a long way toward making government more legible and so more accountable.

This methodology also ensures that domestic and sexual violence against men goes totally unreported in the NCRB database, as such violence against men is not a criminal offense in India. This fact is often missed by the media and feminists alike. Maybe we really do need comprehensive victimization surveys, as suggested by the expert committees. Such surveys, carried out in other countries, have revealed a surprisingly high incidence of violence against men.